When working with industrial cameras such as those from Basler, you don’t always want to run the camera in free-run mode (continuous acquisition). There are many situations where you need to capture a frame exactly when something meaningful happens, for example:

- The moment a button on an experimental setup is pressed

- When a temperature or voltage reaches a certain threshold

- When an external event occurs in another device or system

In such cases, software-triggered acquisition is extremely useful.

In this article, we’ll look at how to perform software-trigger capture using the Basler pylon SDK in C#, and compare it against free-run / GrabOne() acquisitions using simple stopwatch-based timing.

✅ Environment

| Item | Value |

|---|---|

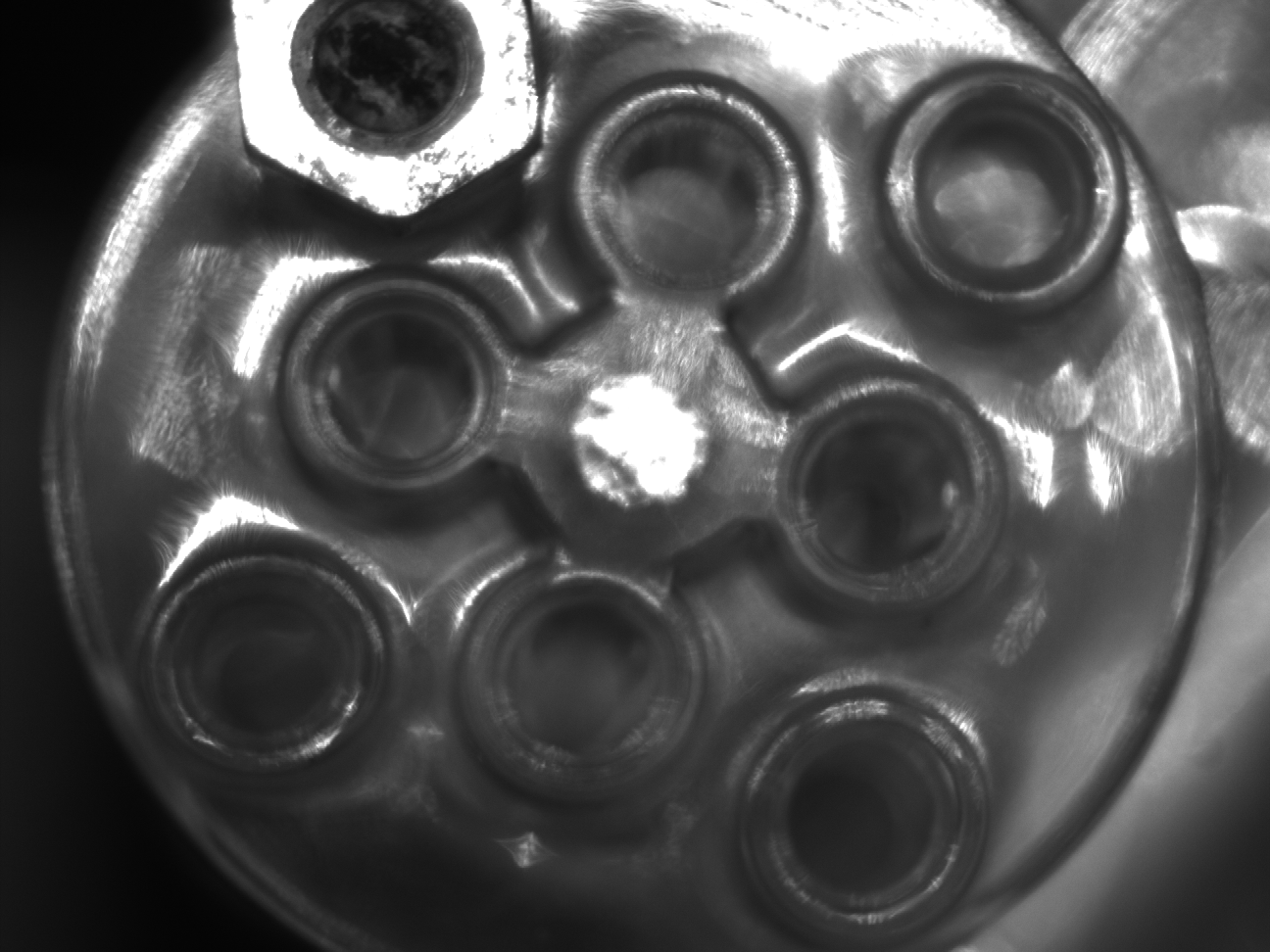

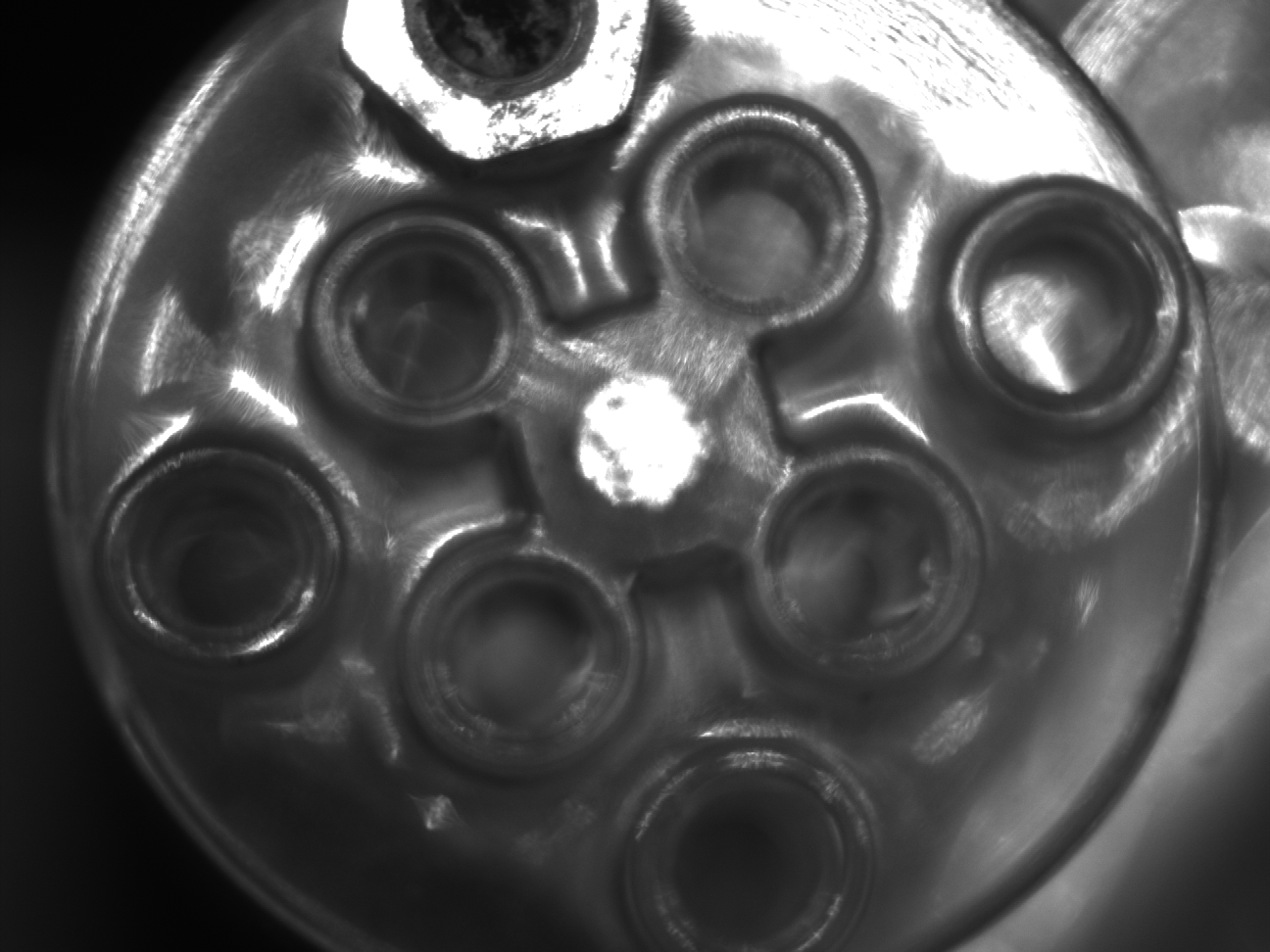

| Camera | Basler acA2500-14gm |

| SDK | Basler pylon Camera Software Suite |

| Lang | C# / .NET 8.0 (Windows) |

As in previous articles (for example, How to record camera settings alongside captured images (C# / .NET)), we extend the same BaslerCameraSample class and add software-trigger-related functions plus an example test.

🔧 Configuring the Software Trigger

In pylon, a software trigger is configured by setting the trigger source to Software and the trigger mode to On, as follows:

```csharp
public bool SetSoftwareTrigger(bool enable)
{
    if (Camera == null)
        return false;

    if (enable)
    {
        // Set the trigger source to Software.
        var isTriggerSourceSet = SetPLCameraParameter(PLCamera.TriggerSource, PLCamera.TriggerSource.Software);

        // Check if trigger source was set successfully.
        if (!isTriggerSourceSet)
        {
            Console.WriteLine("Failed to set software trigger source.");
            return false;
        }

        // Enable trigger mode.
        return SetPLCameraParameter(PLCamera.TriggerMode, PLCamera.TriggerMode.On);
    }
    else
    {
        // Disable trigger mode (free-run).
        return SetPLCameraParameter(PLCamera.TriggerMode, PLCamera.TriggerMode.Off);
    }
}
```

When you want to capture an image, you call ExecuteSoftwareTrigger() to fire the trigger and acquire a frame at that instant:

```csharp
// Fire a software trigger to acquire a single frame.
public void ExecuteSoftwareTrigger(int timeoutMs = 5000)
{
    if (Camera?.WaitForFrameTriggerReady(timeoutMs, TimeoutHandling.ThrowException) == true)
    {
        Camera?.ExecuteSoftwareTrigger();
    }
}
```

Before calling ExecuteSoftwareTrigger, you must start camera streaming with Camera.StreamGrabber.Start().

In other words, the camera must already be armed and grabbing when the trigger is executed.
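For readers without the BaslerCameraSample wrapper from the earlier articles, the same order of operations can be sketched directly against the pylon .NET API. This is a minimal sketch, not the author's implementation. It assumes the Basler.Pylon assembly is referenced, that a camera is connected, and that the camera exposes a FrameStart trigger selector (the wrapper code above does not set the selector explicitly, so that line is an assumption). The timeout values are arbitrary.

```csharp
using System;
using Basler.Pylon;

// Sketch only: needs the pylon .NET assembly and a physical camera to run.
using var camera = new Camera();   // uses the first camera device found
camera.Open();

// Assumption: the frame start trigger is the one we want to drive by software.
camera.Parameters[PLCamera.TriggerSelector].SetValue(PLCamera.TriggerSelector.FrameStart);
camera.Parameters[PLCamera.TriggerSource].SetValue(PLCamera.TriggerSource.Software);
camera.Parameters[PLCamera.TriggerMode].SetValue(PLCamera.TriggerMode.On);

// Start streaming first, so the camera is armed before the trigger is fired.
camera.StreamGrabber.Start();
try
{
    // Wait until the camera reports it can accept a frame trigger, then fire it.
    if (camera.WaitForFrameTriggerReady(1000, TimeoutHandling.ThrowException))
    {
        camera.ExecuteSoftwareTrigger();
    }

    // Pull the triggered frame synchronously instead of using the ImageGrabbed event.
    using IGrabResult result = camera.StreamGrabber.RetrieveResult(5000, TimeoutHandling.ThrowException);
    if (result.GrabSucceeded)
        Console.WriteLine($"Got a {result.Width}x{result.Height} frame");
    else
        Console.WriteLine($"Grab failed: {result.ErrorCode} {result.ErrorDescription}");
}
finally
{
    camera.StreamGrabber.Stop();
    camera.Close();
}
```

Retrieving the result synchronously keeps the sketch short. The event-driven approach used in this series fits better when frames are consumed on another thread.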

🔁 Example: Using the Software Trigger

Here is one example of how to use the software trigger. There are many ways to structure this, but using the existing implementation from earlier articles, we follow this sequence:

- Enable software trigger mode

- Start streaming

- Fire the software trigger

- Wait for frame acquisition (via ImageGrabbed) and then stop streaming

Because there is a delay between firing the trigger and the ImageGrabbed event, we wait until the handler confirms that a frame was actually acquired before stopping streaming.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 |

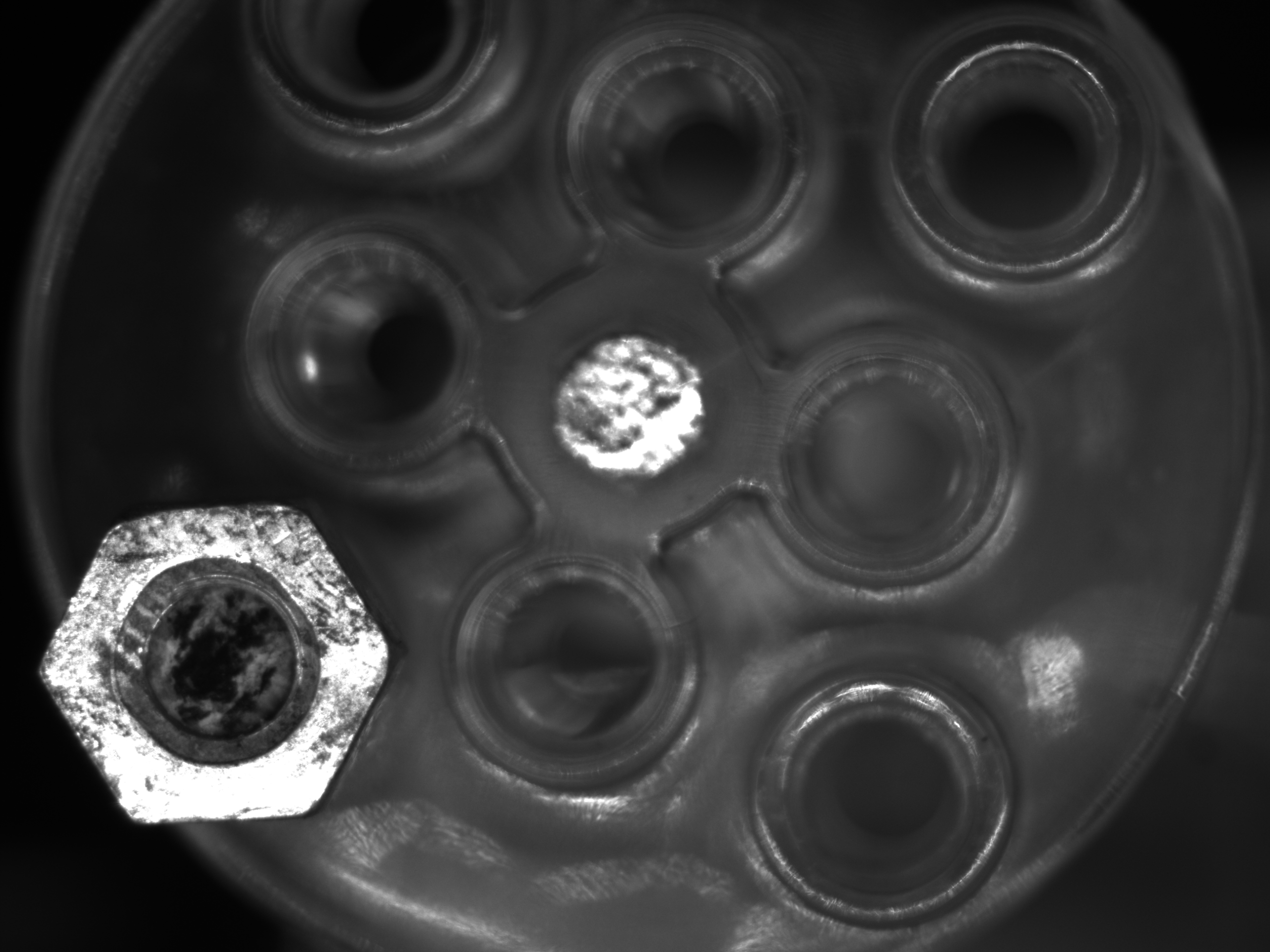

[TestMethod()] public async Task ExecuteSoftwareTriggerTest() { if (!_baslerCameraSample.IsConnected) _baslerCameraSample.Connect(); // Enable software trigger mode. var isEnabled = _baslerCameraSample.SetSoftwareTrigger(true); Assert.IsTrue(isEnabled); // Start streaming. _baslerCameraSample.StartGrabbing(); // Assume some event occurs 1000 ms from now. await Task.Delay(1000); // Measure elapsed time from the moment of the event. var startTime = DateTime.Now; // Fire the software trigger. _baslerCameraSample.ExecuteSoftwareTrigger(); var elapsedTrigger = (DateTime.Now – startTime).TotalMilliseconds; // Example: Elapsed time(Trigger): 5.9921 ms Console.WriteLine($“Elapsed time(Trigger): {elapsedTrigger} ms”); Assert.IsTrue(elapsedTrigger <= 10, “Elapsed time should be less than 10 ms”); // It takes some time until the ImageGrabbed event runs after the trigger. // Use a CancellationToken so that we can cancel the wait when the frame is detected. try { var cts = new CancellationTokenSource(); var waitTask = Task.Delay(1000, cts.Token); while (!waitTask.IsCompleted) { await Task.Delay(1); // Detect frame acquisition via the buffered image queue. if (_baslerCameraSample.BufferedImageQueue.Count > 0) cts.Cancel(); } await waitTask; // If not canceled, no frame arrived within the time limit → treat as failure. Assert.Fail(“OnImageGrabed was not called within the expected time.”); } catch (TaskCanceledException) { // Expected: canceled by OnImageGrabed (frame arrival). } // Stop streaming after receiving the frame. _baslerCameraSample.StopGrabbing(); // Dequeue the captured image, measure the time OnImageGrabed was called, and save the image. 
Assert.AreEqual(1, _baslerCameraSample.BufferedImageQueue.Count); _baslerCameraSample.BufferedImageQueue.TryDequeue(out var item); var elapsedOnImageGrabed = (item.Item1–startTime).TotalMilliseconds; // Example: Elapsed time(OnImageGrabed): 112.0971 ms Console.WriteLine($“Elapsed time(OnImageGrabed): {elapsedOnImageGrabed} ms”); var bitmap = BaslerCameraSample.ConvertGrabResultToBitmap(item.Item2); var fileName = $“TriggeredCapture_{DateTime.Now:yyyyMMdd_HHmmss}.bmp”; BaslerCameraSample.SaveBitmap(bitmap, fileName); Assert.IsTrue(File.Exists(fileName), $“File {fileName} should be created.”); } |

Here, BufferedImageQueue refers to the queue filled by the OnImageGrabbed event handler (as implemented in the earlier event-driven capture article).
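The test above detects the frame by polling the queue every millisecond. As an alternative, the handler could signal a waiting task directly. The following is a hedged sketch of that idea using only .NET types. FrameSignal, NotifyFrameArrived, and FrameSignalDemo are hypothetical names that are not part of BaslerCameraSample or pylon, and the background task stands in for the real ImageGrabbed handler.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical one-shot signal: completes when the first frame arrives.
public sealed class FrameSignal
{
    // RunContinuationsAsynchronously keeps the awaiting code off the grab thread.
    private readonly TaskCompletionSource<DateTime> _tcs =
        new(TaskCreationOptions.RunContinuationsAsynchronously);

    // Call from the ImageGrabbed handler; later calls are ignored.
    public void NotifyFrameArrived() => _tcs.TrySetResult(DateTime.Now);

    // Returns the arrival time, or throws TimeoutException if nothing arrives in time.
    public Task<DateTime> WaitAsync(TimeSpan timeout) => _tcs.Task.WaitAsync(timeout);
}

public static class FrameSignalDemo
{
    // Simulates a frame arriving about 50 ms after the "trigger"
    // and returns the measured delay in milliseconds.
    public static async Task<double> RunAsync()
    {
        var signal = new FrameSignal();
        var start = DateTime.Now;

        // Stand-in for the camera's grab thread raising ImageGrabbed.
        _ = Task.Run(async () =>
        {
            await Task.Delay(50);
            signal.NotifyFrameArrived();
        });

        DateTime arrivedAt = await signal.WaitAsync(TimeSpan.FromSeconds(1));
        return (arrivedAt - start).TotalMilliseconds;
    }
}
```

With this shape, a timeout surfaces as a TimeoutException rather than through a canceled delay task, which may read more directly in a test. Task.WaitAsync requires .NET 6 or later, which the .NET 8 environment used here satisfies.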

⏱ Experiment: Software Trigger vs “Just call GrabOne()”

You might wonder:

“What’s the difference between using a software trigger and simply calling GrabOne() after an event occurs?”

To test this, we extend ExecuteSoftwareTriggerTest to also measure the time taken by a plain GrabOne() call and compare the two.

```csharp
[TestMethod()]
public async Task ExecuteSoftwareTriggerTest()
{
    // ~~ previous code omitted for brevity ~~

    // Disable trigger mode.
    var isDisabled = _baslerCameraSample.SetSoftwareTrigger(false);
    Assert.IsTrue(isDisabled);

    // Measure how long it takes to grab a single frame with GrabOne().
    var grabSW = new Stopwatch();
    grabSW.Start();
    var grabbedImage = _baslerCameraSample.Snap();
    grabSW.Stop();
    var elapsedGrabOne = grabSW.ElapsedMilliseconds;
    // Example: Elapsed time(GrabOne): 193 ms
    Console.WriteLine($"Elapsed time(GrabOne): {elapsedGrabOne} ms");

    // Waiting for a trigger while the camera is already streaming
    // can acquire an image faster than starting a full GrabOne() capture from scratch.
    // Also, with GrabOne() you don't know exactly when the trigger (event) occurred,
    // whereas with a software trigger you can tightly associate the image with the trigger timing.
    Assert.IsTrue(elapsedOnImageGrabed < elapsedGrabOne);
}
```

📊 Results

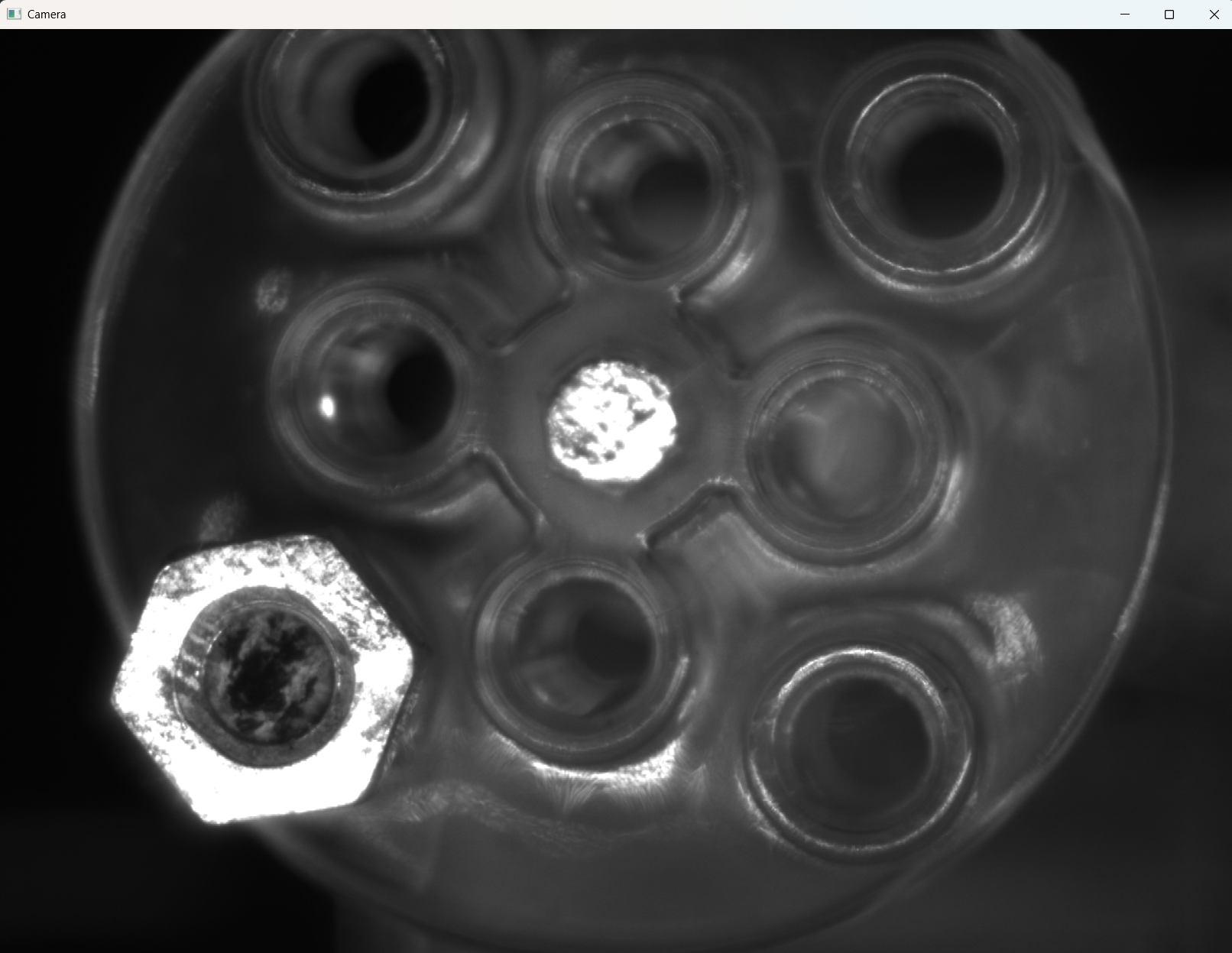

In my test environment (Basler acA2500-14gm, GigE, AMD Ryzen 9, Windows), software-trigger capture achieved a faster time-to-image than a plain GrabOne().

This is likely because the camera is already streaming and armed, so the sensor doesn’t need to be restarted for each frame.

Summary:

| Mode | Acquisition Time | Characteristics |
|---|---|---|
| Software trigger | ~112 ms | Faster image acquisition; precise knowledge of when the trigger occurred |
| GrabOne | ~193 ms | Simple to implement; trigger timing is not strictly known |
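The figures above come from single runs, so they show the trend rather than a distribution. To repeat the comparison, one option is to time each acquisition path several times with Stopwatch, which is monotonic and unaffected by system clock adjustments, and then compare medians. The helper below is a sketch of that approach. Timing, MeasureMs, and Median are hypothetical names, and Thread.Sleep stands in for the camera call being measured.

```csharp
using System;
using System.Diagnostics;
using System.Linq;
using System.Threading;

public static class Timing
{
    // Runs the action the given number of times and returns each duration in milliseconds.
    public static double[] MeasureMs(Action action, int runs)
    {
        var samples = new double[runs];
        for (int i = 0; i < runs; i++)
        {
            long start = Stopwatch.GetTimestamp();
            action();
            // Stopwatch.GetElapsedTime is available from .NET 7 onward.
            samples[i] = Stopwatch.GetElapsedTime(start).TotalMilliseconds;
        }
        return samples;
    }

    // Median is less sensitive to an occasional slow run than the mean.
    public static double Median(double[] samples)
    {
        if (samples.Length == 0)
            throw new ArgumentException("At least one sample is required.", nameof(samples));

        var sorted = samples.OrderBy(x => x).ToArray();
        int mid = sorted.Length / 2;
        return sorted.Length % 2 == 1
            ? sorted[mid]
            : (sorted[mid - 1] + sorted[mid]) / 2.0;
    }
}

public static class TimingDemo
{
    public static void Run()
    {
        // Placeholder workload; replace with the trigger-and-wait sequence or the Snap() call.
        double[] samples = Timing.MeasureMs(() => Thread.Sleep(20), 5);
        Console.WriteLine(
            $"median: {Timing.Median(samples):F1} ms, min: {samples.Min():F1} ms, max: {samples.Max():F1} ms");
    }
}
```

Reporting the minimum and maximum next to the median makes it easier to see whether a difference such as 112 ms versus 193 ms is stable across runs on a given machine.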

📝 Summary

- Software trigger is ideal when you want to capture semantically meaningful moments:
  - e.g., synchronization with experimental data, test equipment, or external signals
- Compared to GrabOne():
  - Software trigger can reduce the delay between event and image acquisition
  - It also lets you know exactly when the trigger was fired

In the next article, we’ll look at how to display camera images in WPF, using the same design that I’m adopting in my in-development camera configuration management tool.

Author: @MilleVision

🛠 Full Sample Code Project (with Japanese comments)

All sample code introduced in this Qiita series is available as a C# project with unit tests on BOOTH:

- Includes unit tests for easy verification and learning

- Will be updated alongside new articles